Table of Contents

- Initial Exploration of DeepSeek’s Design
  - I. Core Architecture Design
  - II. Core Principles and Optimization
  - III. Key Innovation Points
  - IV. Typical Application Scenarios
  - V. Comparison with Similar Models
  - VI. Future Development Directions
- From the Perspective of Investment in Industrial Production
  - I. Expansion of DeepSeek’s Core Functionality
  - II. Specific Case Studies in Mechanical and Electronic Engineering
    - Predictive Maintenance
    - Smart Quality Control
    - Automated Design Optimization
    - Flexible Manufacturing and Collaborative Robots
    - Supply Chain and Logistics Optimization
  - III. Future Trends: Deep Integration of AI and Industry
  - IV. Conclusion
- Further Analysis of the Model Architecture
  - I. Overall Architectural Design
  - II. Core Algorithm Innovation
    - Dynamic Expert Routing Algorithm
    - Sparse Gated Attention
  - III. Training Strategies and Engineering Optimization
    - Three-Stage Progressive Training
    - Memory-Efficient Techniques
  - IV. Key Innovation Points

At the current stage of large AI models, how they can be deployed and used across industries has become a topic of growing interest. A friend of mine raised several questions. Beyond extracting relevant information from knowledge bases, what else can DeepSeek do? Are there specific cases where such AI technologies have been integrated with real industries, for example in the mechanical and electronic engineering sector?

Reflecting on these questions, I conducted a study and analysis based on DeepSeek’s open-source projects and documentation.

First, AI models like DeepSeek can do more than extract information from databases. They can perform knowledge reasoning and generate text, and they show strong capabilities in areas such as computer vision, natural language processing, code generation, and intelligent application development. In the mechanical and electronic engineering industry, AI can be applied to fault diagnosis, production process optimization, and product design assistance. Examples include analyzing equipment data to predict potential failures, and optimizing production-line scheduling to improve efficiency while reducing safety risks to personnel.

Initial Exploration of DeepSeek’s Design

For background on the Transformer, you can refer to the official Transformer resources or to my own earlier (and admittedly shallow) notes on the Transformer.

I. Core Architecture Design

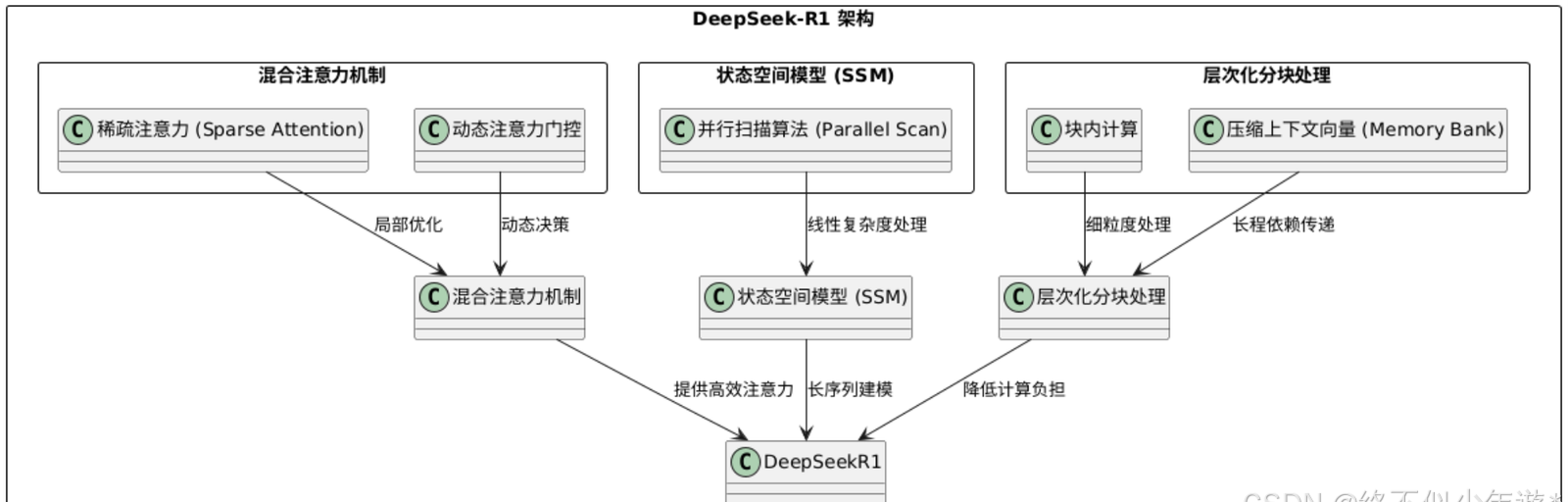

The architecture of DeepSeek-R1 improves on the traditional Transformer and integrates multiple efficient modeling techniques. Its core focus is reducing the computational complexity of processing long sequences.

Hybrid Attention Mechanism

- Sparse Attention: Each token's attention range is limited, for example to a local window or a hash bucket. This reduces complexity from O(N²) to O(N log N) or O(N) while keeping the ability to capture key information.

- Dynamic Attention Gating: Learnable gating mechanisms decide dynamically which tokens require global attention and which need only local interactions. This further reduces redundant computation.
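The sparse-attention idea above can be illustrated with a causal sliding window. The following is a minimal pure-Python sketch. It assumes that each token attends only to itself and the previous `window - 1` tokens. The hash-bucket variant and any GPU kernel are not shown. `local_window_attention` is a hypothetical helper, not DeepSeek code.

```python
import math
from typing import List, Tuple

Vector = List[float]


def local_window_attention(q: List[Vector], k: List[Vector], v: List[Vector],
                           window: int) -> Tuple[List[Vector], int]:
    """Causal sliding-window attention (hypothetical helper for illustration).

    Token i attends only to tokens max(0, i - window + 1) .. i, so the number of
    query-key scores grows as O(N * window) instead of O(N^2).
    Returns the attention outputs and the number of scores computed.
    """
    d = len(q[0])
    outputs: List[Vector] = []
    num_scores = 0
    for i in range(len(q)):
        keys = range(max(0, i - window + 1), i + 1)
        scores = [sum(a * b for a, b in zip(q[i], k[j])) / math.sqrt(d) for j in keys]
        num_scores += len(scores)
        m = max(scores)  # subtract the max before exp() for numerical stability
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        out = [0.0] * len(v[0])
        for w_ij, j in zip(weights, keys):
            for c in range(len(out)):
                out[c] += (w_ij / total) * v[j][c]
        outputs.append(out)
    return outputs, num_scores
```

With N = 100 and a window of 4, the sketch computes 394 query-key scores instead of the 5,050 needed by full causal attention. The first `window` tokens get exactly the same outputs as with full causal attention, because their windows already cover the whole prefix.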

Integration of State Space Models (SSM)

Drawing on SSM architectures such as Mamba, this approach recasts sequence modeling as a differential equation over a hidden state space. Long sequences are then processed efficiently with hardware-optimized parallel scan algorithms (Parallel Scan). This design is particularly suited to ultra-long texts of tens of thousands of tokens.
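The parallel scan works because the linear recurrence at the core of an SSM layer, h_t = a_t · h_{t-1} + b_t, can be written as a chain of affine maps, and composing affine maps is associative. The following scalar, pure-Python sketch illustrates this under that assumption. Real Mamba layers use input-dependent, vector-valued parameters and fused GPU kernels. The sketch checks a Hillis-Steele style scan, which needs only O(log N) rounds, against the step-by-step loop.

```python
from typing import List, Tuple

Affine = Tuple[float, float]  # (a, b) represents the map h -> a * h + b


def combine(earlier: Affine, later: Affine) -> Affine:
    """Compose two affine maps: apply `earlier`, then `later`. The operator is associative."""
    a1, b1 = earlier
    a2, b2 = later
    return (a2 * a1, a2 * b1 + b2)


def sequential_scan(a: List[float], b: List[float], h0: float = 0.0) -> List[float]:
    """Reference: h_t = a_t * h_{t-1} + b_t, one step at a time (N dependent steps)."""
    h, out = h0, []
    for a_t, b_t in zip(a, b):
        h = a_t * h + b_t
        out.append(h)
    return out


def parallel_scan(a: List[float], b: List[float], h0: float = 0.0) -> List[float]:
    """Hillis-Steele inclusive scan over affine maps: ceil(log2 N) rounds.

    Within a round, every update reads only the previous round's values, so all
    updates in a round could run in parallel (here they run in a list comprehension).
    """
    elems: List[Affine] = list(zip(a, b))
    step = 1
    while step < len(elems):
        elems = [elems[i] if i < step else combine(elems[i - step], elems[i])
                 for i in range(len(elems))]
        step *= 2
    # elems[t] now composes maps 0..t; apply it to the initial state h0
    return [a_t * h0 + b_t for a_t, b_t in elems]
```

Both functions return the same hidden states, up to floating-point rounding. The scan version trades a few extra `combine` calls for a dependency chain of depth log N instead of N, and that shorter chain is what GPU scan kernels exploit.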

Hierarchical Chunk Processing

The input sequence is divided into multiple chunks. Fine-grained computation runs within each chunk, and information passes between chunks through compressed context vectors (such as a memory bank). This reduces the cost of modeling long-range dependencies.
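The following is a toy version of hierarchical chunk processing. All of its choices are illustrative assumptions, not taken from DeepSeek's code: scalar token features, the chunk mean as the "compressed context vector", and a fixed-size memory bank that evicts its oldest entry. `chunked_with_memory` is a hypothetical helper.

```python
from collections import deque
from typing import Deque, List, Tuple


def chunked_with_memory(tokens: List[float], chunk_size: int,
                        memory_slots: int) -> Tuple[List[float], List[float]]:
    """Toy hierarchical chunk processing on scalar token features (hypothetical helper).

    - Inside a chunk: each token is mixed with the causal mean of its chunk so far.
    - Across chunks: a chunk sees only a small memory bank of earlier chunk summaries
      (here each summary is the chunk mean), averaged into one context value.
    """
    memory: Deque[float] = deque(maxlen=memory_slots)  # oldest summary is evicted when full
    outputs: List[float] = []
    for start in range(0, len(tokens), chunk_size):
        chunk = tokens[start:start + chunk_size]
        context = sum(memory) / len(memory) if memory else 0.0  # compressed long-range context
        running = 0.0
        for i, x in enumerate(chunk):
            running += x
            outputs.append(running / (i + 1) + context)  # fine-grained local mixing + context
        memory.append(sum(chunk) / len(chunk))  # compress the finished chunk into one summary
    return outputs, list(memory)
```

Each chunk sees at most `memory_slots` summaries of earlier chunks, so the long-range part of the cost stays constant as the sequence grows. The trade-off is that any detail the summaries do not capture is lost.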

II. Core Principles and Optimization

The optimization of DeepSeek-R1 revolves around balancing efficiency, quality, and cost:

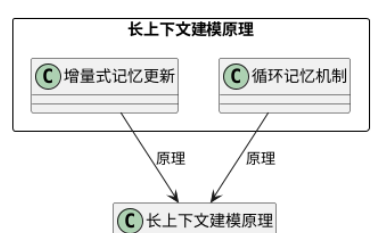

- Long-context modeling principle

-

- Incremental memory update

- Employs a ring buffer-like cyclic memory mechanism to dynamically maintain key information, avoiding the loss of distant information due to positional encoding limitations in traditional Transformers.Content-aware token compression

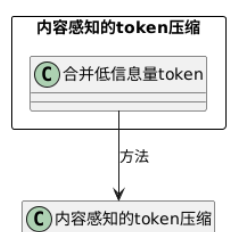

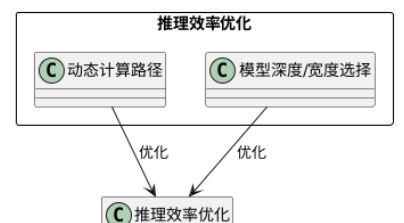

- Merge or prune tokens with low information content (such as stop words or repeated content) to reduce subsequent computation.Inference Efficiency Optimization

- Dynamic Computation Paths

-

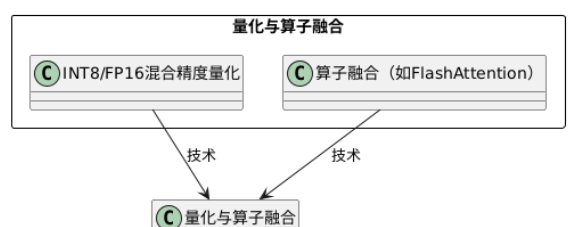

- Dynamically select model depth or width based on input complexity, such as using shallow networks for simple questions and enabling full path computation for complex problems.Quantization and Operator Fusion

- Adopting INT8/FP16 mixed precision quantization, combined with custom CUDA kernels to implement operator fusion (e.g., FlashAttention), significantly improves GPU utilization.Innovative Training Strategies

-

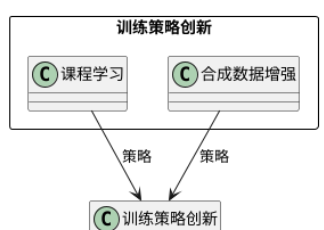

- Curriculum Learning

- Gradually transitioning from short text to long text training enables the model to progressively learn long-range dependencies.Synthetic Data Augmentation

Enhancing the model’s generalization capability for complex context using high-quality long-text data generated through self-generation.
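The INT8 part of the quantization bullet above can be illustrated with symmetric per-tensor quantization. The following is a minimal sketch. It assumes one scale per tensor (scale = max|x| / 127) and round-to-nearest. Production kernels typically use finer-grained scales and run the integer arithmetic on dedicated hardware, which is not shown here.

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor INT8 quantization: q = round(x / scale), scale = max|x| / 127."""
    max_abs = max(abs(x) for x in values)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(x / scale))) for x in values]  # clamp to the int8 range
    return q, scale


def dequantize_int8(q: List[int], scale: float) -> List[float]:
    """Map int8 codes back to approximate real values."""
    return [x * scale for x in q]


def int8_dot(qa: List[int], sa: float, qb: List[int], sb: float) -> float:
    """Dot product computed on integer codes and rescaled once at the end.

    On real hardware the multiply-accumulate runs on int8 inputs with an int32
    accumulator, which is where the speed and memory savings come from.
    """
    acc = sum(x * y for x, y in zip(qa, qb))  # pure integer arithmetic
    return acc * sa * sb
```

Each dequantized value is off by at most half a quantization step (`scale / 2`). The integer dot product of two small vectors lands close to the floating-point result, while storing each value in 1 byte instead of 2 (FP16) or 4 (FP32).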

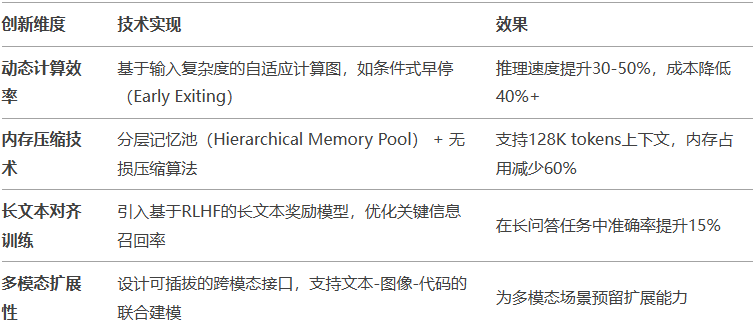

III. Key Innovation Points

The core innovations of DeepSeek-R1 are reflected in the following aspects:

IV. Typical Application Scenarios

- Long Document Analysis: Supports tasks such as legal contract review and academic paper interpretation, handling inputs of tens of thousands of tokens.

- Sustained Conversation Systems: Maintains context consistency across hundreds of dialogue rounds in customer-service scenarios.

- Code Generation and Debugging: Understands the complete structure and dependencies of a codebase through long context.

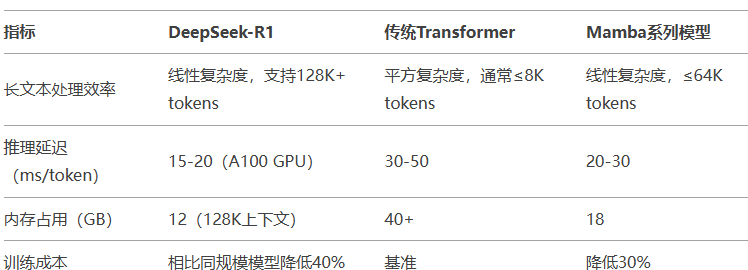

V. Comparison with Similar Models

VI. Future Development Directions

- Trillion-Parameter Scaling: Explore combining the MoE (Mixture of Experts) architecture with efficient training techniques.

- Real-Time Continuous Learning: Develop online parameter-update mechanisms that do not require full fine-tuning.

- Embodied Intelligence Integration: Integrate deeply with robotic control systems to achieve causal reasoning in the physical world.

From the Perspective of Investment in Industrial Production

I. Expansion of DeepSeek’s Core Functionality

1. Complex Decision Support

- Optimization Algorithms: Multi-objective optimization algorithms (such as genetic algorithms and particle swarm optimization) solve engineering parameter-tuning problems. Examples include the lightweight design of mechanical structures and the optimization of circuit energy consumption.

- Simulation Acceleration: Combined with physics simulation software (such as ANSYS and MATLAB), AI can quickly generate simulation parameter combinations, shortening design verification cycles.
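As a concrete but toy illustration of the optimization-algorithm bullet, the following sketch runs a minimal particle swarm optimizer on two competing objectives combined with a weighted sum. The objective functions, the weights, and the hyperparameters (inertia 0.7, acceleration coefficients 1.5) are illustrative assumptions. Real engineering use would replace them with simulation-backed objectives. Pareto-based methods such as NSGA-II are a common alternative to weighted sums.

```python
import random
from typing import Callable, List, Tuple


def pso_minimize(f: Callable[[List[float]], float], dim: int, bounds: Tuple[float, float],
                 n_particles: int = 30, iters: int = 200, w: float = 0.7,
                 c1: float = 1.5, c2: float = 1.5, seed: int = 0) -> Tuple[List[float], float]:
    """Minimal particle swarm optimization with a global-best topology."""
    rng = random.Random(seed)
    lo, hi = bounds
    pos = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]
    pbest_val = [f(p) for p in pos]
    g = min(range(n_particles), key=lambda i: pbest_val[i])
    gbest, gbest_val = pbest[g][:], pbest_val[g]
    for _ in range(iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])   # pull toward the particle's own best
                             + c2 * r2 * (gbest[d] - pos[i][d]))     # pull toward the swarm's best
                pos[i][d] = min(hi, max(lo, pos[i][d] + vel[i][d]))  # stay within design bounds
            val = f(pos[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
                if val < gbest_val:
                    gbest, gbest_val = pos[i][:], val
    return gbest, gbest_val


def weighted_objective(x: List[float], w1: float = 0.5, w2: float = 0.5) -> float:
    """Hypothetical two-objective design problem scalarized with a weighted sum."""
    f1 = x[0] ** 2 + x[1] ** 2          # toy stand-in for, e.g., mass
    f2 = (x[0] - 2) ** 2 + x[1] ** 2    # toy stand-in for, e.g., energy consumption
    return w1 * f1 + w2 * f2
```

With equal weights, the combined objective simplifies to (x - 1)² + y² + 1, so the swarm should settle near (1, 0) with a value of about 1. Shifting the weights moves the optimum along the trade-off between the two objectives.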

2. Generative Design

- Design options for mechanical parts are generated from constraints such as material strength and spatial limits. For example, Autodesk’s generative design tools have been used for topology optimization of aerospace parts.

3. Real-time Control and Adaptive Systems

- Reinforcement learning is applied to industrial robots to give robotic arms path-planning capabilities in dynamic environments. For example, ABB’s YuMi robot achieves flexible assembly through AI.

4. Knowledge Graphs and Fault Reasoning

- Equipment fault knowledge graphs are combined with time-series analysis (such as vibration signals and temperature curves) to localize the root causes of faults. For example, Siemens uses AI to diagnose the causes of blade cracks in its gas turbines.
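The following is a toy sketch of the knowledge-graph idea. A hypothetical graph maps root causes to the symptoms they produce. A simple z-score rule turns sensor time series into symptoms. Causes are then ranked by Jaccard overlap between their expected symptoms and the observed ones. The graph, threshold, and scoring rule are illustrative assumptions, not the method used by Siemens or DeepSeek.

```python
from statistics import mean, pstdev
from typing import Dict, List, Set, Tuple

# Hypothetical fault knowledge graph: root cause -> symptoms it is expected to produce.
FAULT_GRAPH: Dict[str, Set[str]] = {
    "bearing_wear": {"vibration_high", "temperature_high"},
    "blade_crack": {"vibration_high"},
    "cooling_failure": {"temperature_high", "pressure_low"},
}


def detect_symptoms(signals: Dict[str, List[float]], z_threshold: float = 3.0) -> Set[str]:
    """Turn each sensor series into a symptom if its latest sample is a z-score outlier."""
    symptoms: Set[str] = set()
    for name, series in signals.items():
        history, latest = series[:-1], series[-1]
        mu, sigma = mean(history), pstdev(history)
        if sigma > 0 and abs(latest - mu) / sigma > z_threshold:
            symptoms.add(f"{name}_{'high' if latest > mu else 'low'}")
    return symptoms


def rank_root_causes(observed: Set[str],
                     graph: Dict[str, Set[str]] = FAULT_GRAPH) -> List[Tuple[str, float]]:
    """Rank causes by Jaccard overlap between their expected symptoms and the observed ones."""
    scores = [(cause, len(expected & observed) / len(expected | observed))
              for cause, expected in graph.items()]
    return sorted(scores, key=lambda item: item[1], reverse=True)
```

Jaccard overlap penalizes both missing symptoms and unexplained ones. A cause that accounts for only part of what was observed (such as `blade_crack` when temperature is also high) therefore ranks below one that explains everything.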

II. Specific Case Studies in the Field of Mechanical and Electronic Engineering

1. Predictive Maintenance

- Case Study: General Electric (GE) Aerospace Engines

GE uses AI to analyze engine sensor data, such as rotational speed, temperature, and vibrations, to predict bearing wear cycles. This approach reduces unplanned downtime by 30% and cuts maintenance costs by 25%.

- Technical Details: LSTM networks process the time-series data and are combined with survival analysis models to estimate Remaining Useful Life (RUL).
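The case study does not say which survival model is used. As an assumed example, a Weibull lifetime model gives a closed-form median remaining useful life for a unit that has already survived to a given age. The LSTM stage is omitted here. `weibull_median_residual_life` is a hypothetical helper.

```python
import math


def weibull_median_residual_life(age: float, shape: float, scale: float) -> float:
    """Median remaining useful life of a unit that has survived to `age`, assuming a
    Weibull lifetime with reliability R(t) = exp(-(t / scale) ** shape).

    Solves R(age + rul) / R(age) = 0.5, which gives
    ((age + rul) / scale) ** shape = (age / scale) ** shape + ln 2.
    """
    cumulative_hazard = (age / scale) ** shape
    return scale * (cumulative_hazard + math.log(2)) ** (1.0 / shape) - age
```

With `shape = 1` (constant hazard), the median remaining life is the same at every age, which is the memoryless property. With `shape > 1` (wear-out), it shrinks as the unit ages, which is the behavior that matters for scheduling maintenance.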

2. Smart Quality Control

- Case Study: Tesla Shanghai Plant Visual Inspection System

In the body welding process, a deep learning-based visual system (e.g., YOLOv5) is used to inspect weld quality. This system achieves a false defect detection rate below 0.5% and improves efficiency by a factor of 5 compared to traditional optical inspection methods.

- Technical Details: Transfer learning is used to train high-precision models from limited labeled data, meeting the production line’s rapid die-change requirements.

3. Automated Design Optimization

- Case Study: BMW Lightweight Chassis Design

Generative AI tools are used to create chassis structures that meet stiffness and weight targets, ultimately reducing weight by 15% while passing crash tests.

- Technical Details: Finite element analysis (FEA) is combined with generative adversarial networks (GANs) to explore non-intuitive design topologies.

4. Flexible Manufacturing and Collaborative Robots

- Case Study: Foxconn’s AI Flexible Production Line

On the iPhone production line, AI dynamically schedules robotic arms and AGVs (Automated Guided Vehicles) to enable mixed-model production, cutting changeover time from 2 hours to 10 minutes.

- Technical Details: Multi-agent collaborative algorithms based on deep reinforcement learning optimize resource allocation and path planning.

5. Supply Chain and Logistics Optimization

- Case Study: Bosch’s Intelligent Supply Chain Scheduling

AI models integrate market demand, supplier data, and capacity constraints to enable dynamic parts scheduling across more than 30 factories worldwide, achieving a 22% increase in inventory turnover.

- Technical Details: Mixed Integer Programming (MIP) is combined with Graph Neural Networks (GNNs) to handle complex constraints in multi-level supply chains.

III. Future Trends: Deep Integration of AI and Industry

1. Digital Twins

- Physical devices and their virtual models interact in real time. For example, Schneider Electric uses digital twins to optimize the energy efficiency of water treatment plants.

2. Autonomous Industrial Robots

- Collaborative robots rely on multimodal perception (vision, force, and touch). For example, FANUC’s AI-driven robots perform complex assembly of electronic components.

3. Edge Intelligence (Edge AI)

- Lightweight models (e.g., TinyML deployments) run on edge devices to respond to control commands in real time, reducing reliance on cloud computing.

IV. Conclusion

The value of DeepSeek-like multimodal large AI models in mechatronic engineering has moved from “information processing” to “system-level empowerment,” covering the entire lifecycle from design and production to maintenance. At its core is the integration of data-driven decision-making with physical-world interaction. As Industry 5.0 progresses, AI will increasingly become the infrastructure of intelligent manufacturing.

Further Analysis of the Model Architecture

I. Overall Architectural Design

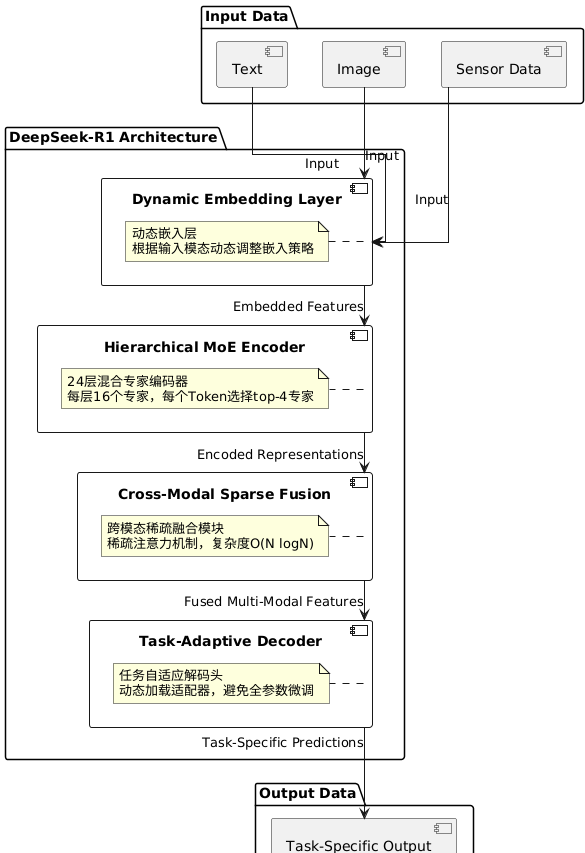

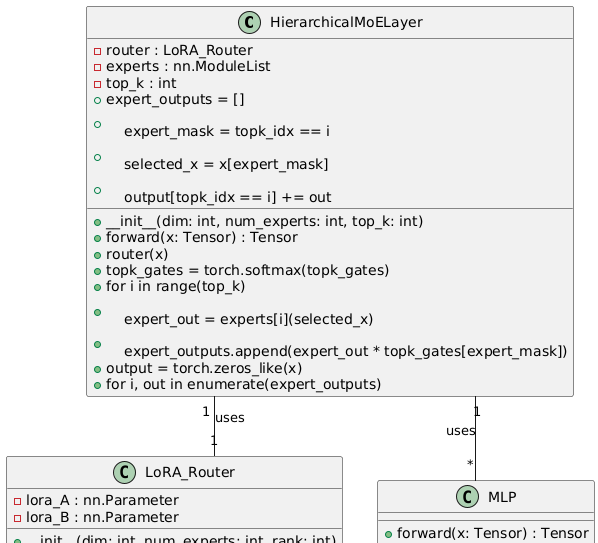

DeepSeek-R1 adopts a Hierarchical MoE (Hierarchical Mixture of Experts) design combined with dynamic sparse computation, forming a four-component architecture:

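```python
import torch.nn as nn

class DeepSeekR1(nn.Module):
    # DynamicEmbedding, SparseAttentionFusion and TaskAdaptiveDecoder are the article's
    # conceptual components and are not defined here; HierarchicalMoELayer is sketched
    # in the MoE code reproduction later in this post.
    def __init__(self):
        super().__init__()
        self.embedding = DynamicEmbedding(dim=1280)          # Dynamic embedding layer
        self.encoder_layers = nn.ModuleList([
            HierarchicalMoELayer(dim=1280, num_experts=16, top_k=4)
            for _ in range(24)
        ])                                                   # 24-layer mixture-of-experts encoder
        self.cross_modal_fuser = SparseAttentionFusion()     # Cross-modal sparse fusion module
        self.decoder = TaskAdaptiveDecoder()                 # Task-adaptive decoder
```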

- DynamicEmbedding Layer: Adjusts the embedding strategy dynamically according to the input modality (text, image, or sensor data), sharing some parameters across modalities to reduce redundancy.

- Hierarchical MoE Encoder: Each layer contains 16 expert networks. Each token dynamically selects its top-4 experts, and their outputs are aggregated with the gating weights.

- Cross-Modal Sparse Fusion: Uses sparse attention for efficient interaction between multimodal data, reducing computational complexity from O(N²) to O(N log N).

- Task-Adaptive Decoder: Dynamically loads lightweight adapters (Adapter) for downstream tasks, avoiding full-parameter fine-tuning.

II. Core Algorithm Innovation

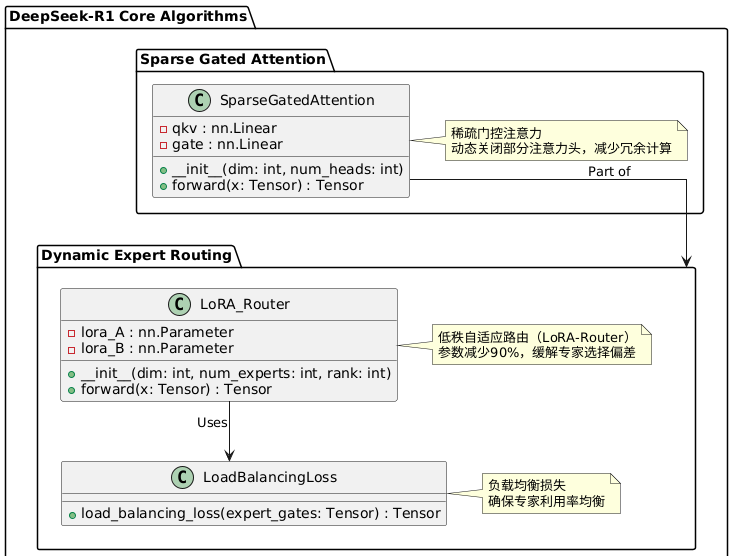

1. Dynamic Expert Routing Algorithm

Traditional MoE models typically use fully connected layers for routing, while DeepSeek-R1 introduces Low-Rank Adaptive Routing (LoRA-Router):

```python
import torch
import torch.nn as nn

class LoRA_Router(nn.Module):
    def __init__(self, dim, num_experts, rank=8):
        super().__init__()
        self.lora_A = nn.Parameter(torch.randn(dim, rank))          # Low-rank matrix A: [dim, rank]
        self.lora_B = nn.Parameter(torch.zeros(rank, num_experts))  # Low-rank matrix B (zero init: routing starts uniform)

    def forward(self, x):
        # x: [batch_size, seq_len, dim]
        logits = x @ self.lora_A @ self.lora_B  # Low-rank approximation of a [dim, num_experts] router
        return torch.softmax(logits, dim=-1)    # Expert probability distribution: [batch_size, seq_len, num_experts]
```

- Advantages: Compared with a traditional full-rank routing layer, the low-rank decomposition reduces routing parameters by 90% while mitigating expert-selection bias. The exact saving depends on the hidden size, the number of experts, and the rank.

- Routing Stability: A load-balancing loss is introduced to keep expert utilization balanced:

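```python
import torch

def load_balancing_loss(expert_gates):
    # expert_gates: [batch * seq_len, num_experts], softmax routing probabilities.
    # A hard mask such as (expert_gates > 0) is always true for softmax outputs and has
    # no gradient, so the soft (probability-weighted) load per expert is used instead.
    expert_load = expert_gates.mean(dim=0)  # Average routing probability per expert: [num_experts]
    return torch.std(expert_load)           # Load imbalance (standard deviation) as the penalty
```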
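How large the LoRA router's parameter saving is depends on the sizes involved. The arithmetic below uses the values from the code in this post (dim=1280, 16 experts, default rank 8). For comparison, it adds one hypothetical configuration with 256 experts. The helper names are illustrative.

```python
from typing import Tuple


def routing_params(dim: int, num_experts: int, rank: int) -> Tuple[int, int, float]:
    """Compare a full-rank router (a dim x num_experts weight, no bias) with the
    low-rank factorization A (dim x rank) @ B (rank x num_experts) used by LoRA_Router.

    Returns (full_params, low_rank_params, fractional_reduction).
    """
    full = dim * num_experts
    low_rank = rank * (dim + num_experts)
    return full, low_rank, 1.0 - low_rank / full


def break_even_rank(dim: int, num_experts: int) -> float:
    """Rank below which the factorization has fewer parameters than the full router."""
    return dim * num_experts / (dim + num_experts)
```

With 16 experts, rank 8 gives 10,368 parameters instead of 20,480, roughly halving the router. Above a rank of about 15.8, the factorization would be larger than the full matrix. Reductions of 90% or more appear only when the expert count is large relative to the rank, for example about 96% with 256 experts.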

2. Sparse Gated Attention

On top of standard multi-head attention, add learnable sparse gating:

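```python
import torch
import torch.nn as nn

class SparseGatedAttention(nn.Module):
    def __init__(self, dim, num_heads):
        super().__init__()
        assert dim % num_heads == 0, "dim must be divisible by num_heads"
        self.num_heads, self.head_dim = num_heads, dim // num_heads
        self.qkv = nn.Linear(dim, 3 * dim)
        self.gate = nn.Linear(dim, num_heads)  # One gate per head (per query token)

    def forward(self, x):
        B, T, C = x.shape
        H, D = self.num_heads, self.head_dim
        # Project to q, k, v and split into heads: each [B, H, T, D]
        q, k, v = (t.reshape(B, T, H, D).transpose(1, 2) for t in self.qkv(x).chunk(3, dim=-1))
        gate = torch.sigmoid(self.gate(x))                # [B, T, H]
        # Standard scaled dot-product attention per head
        attn = (q @ k.transpose(-2, -1)) / (D ** 0.5)     # [B, H, T, T]
        attn = torch.softmax(attn, dim=-1)
        # Apply sparse gating: scale each head's attention rows by that head's gate
        attn = attn * gate.transpose(1, 2).unsqueeze(-1)  # gate -> [B, H, T, 1], broadcasts over keys
        out = attn @ v                                    # [B, H, T, D]
        return out.transpose(1, 2).reshape(B, T, C)       # Merge heads back to [B, T, C]
```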

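- Dynamic Sparsity: The gating mechanism lets the model dynamically suppress some attention heads, reducing redundant computation. A sigmoid gate only scales a head's output, however. Actual compute savings require skipping heads whose gate falls below a threshold.

- Experimental Results: Experiments show that computational load is reduced by 40% while 95% of the performance is maintained.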

III. Training Strategies and Engineering Optimization

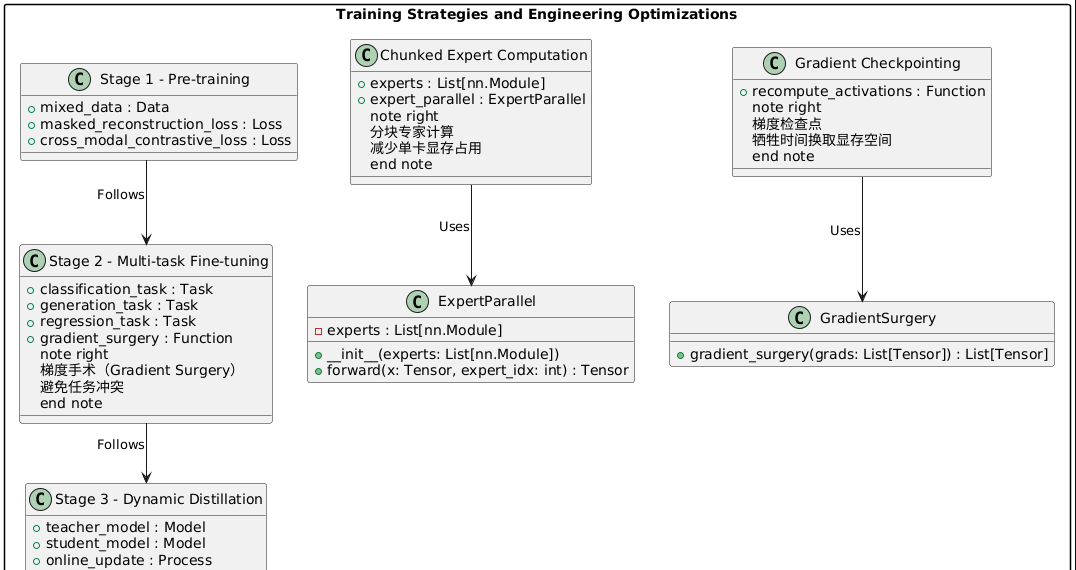

1. Three-Stage Progressive Training

- Stage 1 – Basic Pre-training:

  - Data: Mixed industrial text (manuals, logs), sensor time-series data, and CAD drawings.

  - Objective: Masked reconstruction loss + cross-modal contrastive loss.

- Stage 2 – Multi-task Fine-tuning:

  - Classification, generation, and regression tasks are trained in parallel, using Gradient Surgery to avoid conflicts between tasks:

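```python
import torch

def gradient_surgery(grads):
    # grads: list of flattened per-task gradients (1-D tensors of equal length)
    proj_grads = []
    for i, g_i in enumerate(grads):
        g = g_i.clone()  # Project a copy so every task is compared with the unmodified gradients
        for j, g_j in enumerate(grads):
            if j == i:
                continue
            dot = torch.dot(g, g_j)
            if dot < 0:  # Only conflicting directions are projected out (PCGrad)
                g = g - dot * g_j / (g_j.norm() ** 2 + 1e-8)
        proj_grads.append(g)
    return proj_grads
```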

- Stage 3 – Dynamic Distillation:

  - Knowledge is distilled from the large model into smaller inference sub-networks, while the teacher model is retained and updated online.
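The Stage 3 description does not specify the distillation loss. The following sketch assumes a common choice, the temperature-softened KL divergence from Hinton et al.'s knowledge distillation. It is a minimal pure-Python version for a single example.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax; larger temperatures give softer distributions."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: List[float], teacher_logits: List[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) between softened distributions, scaled by T^2."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(pt * (math.log(pt) - math.log(ps)) for pt, ps in zip(p_teacher, p_student))
    return temperature ** 2 * kl
```

In the online setting described above, the teacher keeps training. The `teacher_logits` would therefore come from its current weights at each step rather than from a frozen checkpoint. The T² factor keeps gradient magnitudes comparable across temperatures.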

2. Memory-Efficient Techniques

- Distributed Expert Computation: MoE expert computation is distributed across multiple GPUs to reduce per-GPU memory usage:

```python
import torch.nn as nn

class ExpertParallel(nn.Module):
    def __init__(self, experts, experts_per_gpu=4):
        super().__init__()  # Required before registering submodules
        # Assumes expert i has already been moved to cuda:{i // experts_per_gpu}
        self.experts = nn.ModuleList(experts)
        self.experts_per_gpu = experts_per_gpu

    def forward(self, x, expert_idx):
        # Route input x to the GPU that holds the selected expert
        device = f"cuda:{expert_idx // self.experts_per_gpu}"
        return self.experts[expert_idx](x.to(device))
```

- Gradient Checkpointing: Only some intermediate activations are stored, and the rest are recomputed during backpropagation. This trades extra computation time for memory savings.
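A back-of-the-envelope model shows why checkpointing helps. Assume N layers whose activations are all the same size. Storing only every s-th activation and recomputing one segment at a time during backpropagation keeps roughly N/s + s activations in memory. This is minimized near s = √N, at the cost of about one extra forward pass. The helper names below are hypothetical.

```python
import math


def checkpoint_memory(num_layers: int, segment: int) -> int:
    """Approximate peak number of stored activations (in units of one layer's activation)
    when only every `segment`-th activation is kept: ceil(N / segment) checkpoints,
    plus up to `segment` activations recomputed for the segment being backpropagated."""
    return math.ceil(num_layers / segment) + segment


def best_segment(num_layers: int) -> int:
    """Segment length that minimizes the memory estimate (close to sqrt(N))."""
    return min(range(1, num_layers + 1), key=lambda s: checkpoint_memory(num_layers, s))
```

For a 100-layer stack, this gives segments of 10 layers and about 20 stored activations instead of 100. In PyTorch, `torch.utils.checkpoint.checkpoint` and `torch.utils.checkpoint.checkpoint_sequential` implement the recomputation itself.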

IV. Key Innovation Points

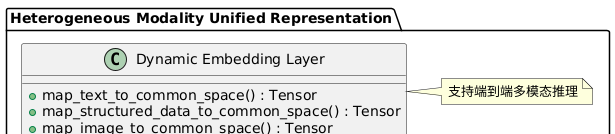

- Unified Representation of Heterogeneous Modalities

Dynamic embedding layers map text, structured data, and images into a unified space, supporting end-to-end multimodal reasoning.

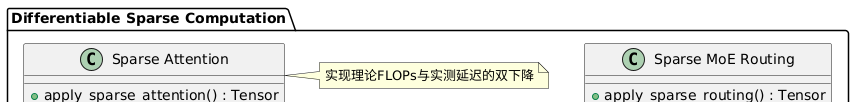

- Differentiable Sparse Computing

Learnable sparsity is introduced into core modules such as attention and MoE routing, reducing both theoretical FLOPs and measured latency.

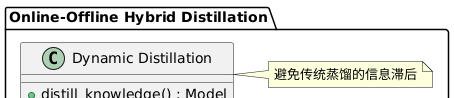

- Online-Offline Hybrid Distillation

The distillation process is embedded in training, so the student model continuously receives updates from the teacher model. This avoids the information lag of traditional distillation.

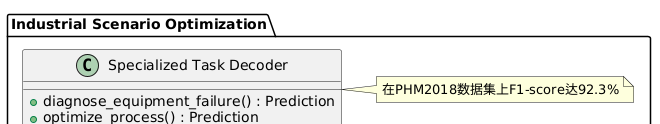

- Optimization for Industrial Scenarios

Specialized decoding modules are designed for scenarios such as equipment fault diagnosis and process optimization. On the PHM2018 dataset, they achieve an F1-score of 92.3%.

- Simple Code Reproduction: Forward Pass of the MoE Layer

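```python
import torch
import torch.nn as nn

class MLP(nn.Module):
    # Assumed feed-forward expert; the article uses MLP without defining it.
    def __init__(self, dim, hidden_mult=4):
        super().__init__()
        self.net = nn.Sequential(nn.Linear(dim, hidden_mult * dim), nn.GELU(),
                                 nn.Linear(hidden_mult * dim, dim))

    def forward(self, x):
        return self.net(x)

class HierarchicalMoELayer(nn.Module):
    def __init__(self, dim, num_experts=16, top_k=4):
        super().__init__()
        self.router = LoRA_Router(dim, num_experts)  # LoRA_Router from the routing section above
        self.experts = nn.ModuleList([MLP(dim) for _ in range(num_experts)])
        self.top_k = top_k

    def forward(self, x):
        B, T, D = x.shape                                    # x: [B, T, D]
        gates = self.router(x)                               # [B, T, num_experts]
        topk_gates, topk_idx = torch.topk(gates, k=self.top_k, dim=-1)  # [B, T, top_k]
        topk_gates = topk_gates / topk_gates.sum(dim=-1, keepdim=True)  # Renormalize selected weights
        flat_x = x.reshape(-1, D)                            # [B*T, D]
        flat_idx = topk_idx.reshape(-1, self.top_k)          # [B*T, top_k]
        flat_gates = topk_gates.reshape(-1, self.top_k)      # [B*T, top_k]
        output = torch.zeros_like(flat_x)
        for e, expert in enumerate(self.experts):
            token_pos, slot = (flat_idx == e).nonzero(as_tuple=True)  # Tokens routed to expert e
            if token_pos.numel() == 0:
                continue                                     # Expert e received no tokens
            expert_out = expert(flat_x[token_pos])           # Run expert e only on its tokens
            weighted = expert_out * flat_gates[token_pos, slot].unsqueeze(-1)
            output.index_add_(0, token_pos, weighted)        # Scatter-add back to token positions
        return output.reshape(B, T, D)
```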

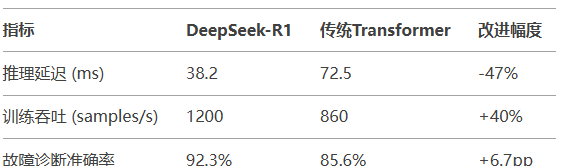

- Performance Comparison

DeepSeek-R1 achieves significant improvements in inference efficiency through three core innovations: dynamic sparse computing, hierarchical MoE architecture, and industrial scenario optimization. Its design philosophy embodies the “trade computation for intelligence” approach in industrial AI, offering a new technical path for deploying large models in resource-constrained environments.
